At Perficient, one significant benefit of being a premier partner with elite cloud providers the likes of Google, AWS, and Azure, is the early adopter access granted to level up SMEs (subject matter experts) so that partner engagements between Perficient and its customers are meaningful, profitable, and repeatable. In this initial blog post from Josh Hull, an awe-struck fan of the point-in-time intersection of technology and humans, we’ll examine the success goal of:

- an arguably weak engineering prompt in

-

multimodal, but

-

zero shot artificial intelligence compute, and how

-

ambiguity can lead to reduced accuracy in

-

preview-level robot sandboxes.

Now if that goal is meaningful for you, and you continue to read, the obligatory Uncle Ben disclaimer is inbound: use whatever you will of merit from the information shared, yet this series is by no means a production-ready walkthrough primer. If anything, it’s simply a thought exercise, and perhaps a conversation starter, for those eager to share on the topic.

To level set, let’s briefly break down the goal:

Item 2: A multimodal AI is exactly what it sounds like. For inputs, we don’t have to limit to text, an image, a data set or stream, nor one specific mode, but rather, multimodal intentionally combines these inputs for interdependent analysis: video + audio, text + data, prompts + data + images… The applications of this are simply profound, and fun to ruminate about.

Item 3: When we describe something as zero shot, it means that the goal (and, to some extent, underlying training model of the AI) is to provide a relevant response through a single iteration. What does that mean? We won’t be taking the output or response of the AI and feeding it back in for a 2nd or nth attempt. We won’t be training the model, but rather, assume it’s capable in it’s current functional state of an accurate and useful response in a single question-answer attempt.

Jin Yang’s “Hotdog Not Hotdog” in the delightful yet not-safe-for-work Silicon Valley is a real, working example of zero shot analysis. Most things aren’t *** dogs, and the app is exceptional at determining this through evaluating an object in a single photo.

Items 1 and 4: Prompt engineering is like any other system of processing. The quality of the input directly influences the quality, and utility, of the output. When we inject ambiguity into our prompts (intentional or otherwise), we get poor output. Think of something that easily distracts you… Perhaps you might prefer to liken it to “leading the witness” in a courtroom legal drama: by publicly planting ideas in the witness’s head, the witness response is less relevant than the impression made on the judge and jury.

And finally, item 5, or the preview-level robot sandboxes: We could describe the initial dalliances into interacting with these new AI platforms as a service as taking an AI for an analogous test drive on closed roads with no traffic. The analogy is very different from doing a crazy test drive during rush-hour with loved ones as passengers. This is intentionally not use-case specific nor designed to be repeatable, but rather, merely an examination of the look and feel, drivability, comfort, and operator experience. The beauty of working with an early-adopter platform is the reduced risk and the “t-minus”: this is not a project deliverable, under timebound and budget pressures. We can launch when we feel we understand the technology and it’s relevant application, and when the support model for the solution is no longer in preview.

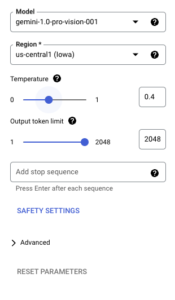

Without any previous experience with prompting Gemini on Google’s VertexAI offering*, a single off-the-cuff prompt and a single color image creates a 337-token hello world live test. None of the variables were changed from default.

What are tokens in relation to AI as a service? It’s a measure by which a prompter consumes cloud resources to elicit a response. AI as a service prompts are conservatively bounded so as not to consume too great an expense of operation, and to limit the impact any one prompt may have on the stability of the underlying system processing the request. The more you ask the robot to process, the more tokens it takes to get a viable response. In the real world, a work_dir directory would be uploaded, having only those files you wish to undergo evaluation, and not an entire directory, drive, nor desktop. This token limit can be raised, with a quota increase and cost consumption acknowledgement.

Let’s set up the scenario. Many of us have enjoyed those amazing geoguesser folks on reddit, tiktok, or youtube, who can pinpoint a photograph’s location to within miles anywhere on the globe. Others of us rock an older profile photo out of a healthy mix of vanity, shame, and business (as in, too busy to take a current headshot). How can we create a prompt that mimics a niche human talent with a single photo for Gemini to evaluate, and intentionally (in this case) lead the witness (poor robot!) by not being a better engineer (honing our prompt to give the AI the best shot at success in a single attempt)? Caveat: the prompt includes phrasing that is counter to the tool’s designed use. Gemini is not designed to be zero-shot, and the more information and iterations, the better the resulting outputs. It’s designed to learn. This is the don’t do what I do part of the test-drive.

As an aside, a quick flashback to less than a year ago: This AI tool functionality would have required extensive design and development of a potluck of langchains, worker agents, vector databases, and LLMs to produce salient output. The technology is advancing so fast that by the time you’ve read this, another breakthrough will have been made in human-machine interactions.

I’m about to ask Google’s Vertex AI multimodal (Gemini) engine to respond to a single prompt + image.

Here’s the prompt:

“This profile photo was taken in a top tier hotel in North America. From the background, determine time of year, geolocation, and floor height. Because this is zero shot, I can only give one hint. Do your best with the hint. The hint is aquatic. Try to achieve a bonus objective: the year (within 2) of when the photo was taken.”

And here’s the profile image (be kind, this guy didn’t realize he was going to be subject to scrutiny when sitting for the photo):

We haven’t told the AI what we’re expecting as an output, or what role it should play as an evaluator. We are also specifically calling out zero-shot, leading the witness to assume no further information is available, and that there is only one chance to respond. The prompt spent as many tokens on a hint as it did to provide context, and incentivized “extra credit” for a stretch goal. With a 2400 token limit (but only allocating 337 tokens) Gemini took less than four seconds to respond. Before sharing the results, how accurate do you expect the response to be? Will the single-serving robot get the city correct? The floor? The time of the year? The year the picture itself was taken? Feel free to guess in the comments below. If you happen to enjoy geo guessing yourself, what hotel is this? Given the position of the light, can you guess the time of year?

Gemini’s output:

“The photo was taken in Chicago, Illinois, in the late afternoon in early September. The sun is shining brightly outside, and the city’s skyline is visible in the background. The photo was taken on the 25th floor of a hotel, and the hint is that there is a river in the background. The Chicago River runs through the city, and it is a popular tourist destination. The photo was taken in 2021.”

As a zero shot, two items of data were nearly 100% accurate: the time of year and the floor of the hotel. The city, the response to the hint, and the year were off the mark. Given a better hint, better use of tokens, and a role to play in evaluating the image, we’re confident this could have been far more accurate. Imagine being a *** image evaluator without knowing you are a world-renowned *** evaluator. Moreover, this is a profile photo, rather than a photo of a landmark prominent in the foreground. To be fair, our robot had very little, image-wise, to evaluate. What we are asking of these systems is remarkable.

SPOILER (for those still guessing):

The photo was taken late August (~ one week), 2017 (+4 years), on the 25th floor (100% correct) of the Park Hyatt New York hotel in Manhattan (same distance from the equator as Chicago, Illinois!), beside their luxurious indoor **** (the target of the sub-optimal one-word hint that threw off the entire vector).

In part two of this series, we’ll examine the output from Gemini, evaluate the merits of the response, and look at ways to reduce expensive, sub-optimal prompts and the resulting expensive token consumption.

If you are going to be at Google NEXT in early April, come find Perficient. My colleagues and I would **** to visit with you about your current AI strategy, how your cloud is holding up, and what the future of technology holds for businesses seeking to maximize profit.

*Previous preview interactions include OpenAI ChatGPT4, Google’s Composer (Apache airflow) for use with Directed Acyclic Graphs or DAGs, Github CoPilot, and Google’s Bard.