Posted by

Andreas Voniatis

APIs can often be quite intimidating for SEO professionals, especially for those more accustomed to working in Microsoft Excel. While Rank Ranger provides easy-to-follow documentation, I’ve written this guide to provide you with the Python code so that you can literally copy-paste the code to get started immediately.

The Rank Ranger API offers many capabilities from two main APIs:

- Reporting: managing and extracting data on clients you’re tracking data within your Rank Ranger account

- Search: competitive intelligence such as the SERPs with features

We’ll be focusing on getting data on your:

- Package ID number (required to get data from the rest of the API)

- Campaigns that you’re running

- Keywords (within a campaign)

- Rankings (for your keywords)

You’ll notice in each section there are quite a few steps that we explain to help you understand what the Python code is doing so you can learn a little Python on the way while appreciating mini-hurdles to be overcome in getting your data!

Preliminaries

You can assume that all of the Python code will be run within a Jupyter notebook locally on your machine, however, the code should also run in Google colab notebooks also.

We start by importing our libraries:

You’ll need your API token which is generated under Settings > API & Connected Apps to replace the fictitious API token below:

api_token = ‘12345-x1234abcd12345abcdefg0xyz1h2a3seo’

Package ID number

Once the Python library packages are imported and the api token variable is set, we’re ready to start using the API:

First we build and make the request of which the result is saved to an object called ‘r’. The request ‘r’ is the API URL we called includes the API URL, the ‘packages’ API endpoint (highlighted in yellow), the api key and the output which is set to json:

We print ‘r’ so we can see whether the API call managed to connect (or not):

print(r)

Below we get a 200 response which is a good sign:

<Response [200]>

packages_output = pd.read_json(r.text)

To make the json output parseable, we’ll convert it to a list using the to_list() function:

packages_list = packages_output[‘packages’].to_list()

Using print we can see what it looks like:

print(packages_list)

Example output is shown below with the package ID number highlighted:

Campaigns

Once you have your Package ID which in the above example is printed out as ‘87321’, you can start seeing what campaigns you have to extract data from. We’ll start by setting the packages_id variable:

packages_id = ‘87321’

Then we’ll build the request, this time using the ‘package_campaigns’ endpoint:

As before we’ll unpack the API response using read_json, convert to a list and print the results:

Again we have a fictitious list to demonstrate what the output would look like:

Note that the output lists all the campaigns by their campaign id, name, the domain and the search engine targeted.

Keywords

Let’s get the keywords from the Slate Safety campaign which will use the get_campaign_info API endpoint and the desired campaign id set below:

You’ll see from the keywords_output that you get all kinds of information such as the domain, number of keywords, the keywords themselves, domain, search engine etc:

As usual, we’ll convert the output to a list:

keywords_list = keywords_output[‘result’].to_list()

Then to get the keywords we simply need the 6th element of the list by passing in ‘5’. The reason we pass in 5 and not 6 is because Python uses zero-based indexing where 0 is counted as the 1st position in any list:

print(keywords_list[5])

The output shown below not only has the keywords but also any tags assigned to it. Note that the 6th element of the list is a dictionary which is one of the ways Python stores data. In this case, the dictionary key is ‘keyword’ and the value is a list of dictionaries (one per keyword):

Rankings

Luckily you don’t need the keywords to get your rankings. Unlike other rank tracker APIs I’ve worked with (of which their shame shall be spared by remaining nameless!), the Rank Ranger API only requires the:

-

Campaign ID -

**** -

Domain -

Search Engine ID

You can even request whether you’d like all the rank data per keyword or the highest rank per keyword using the include_best_rank parameter.

Let’s get the keyword rank data using the above to build the API request using the rank_stats API endpoint.

First, set the parameters:

Build and make the API request, before converting to a list:

And here is the result of which rank data for the first 4 keywords are shown:

Note that the URL data is actually the domain and that ‘lp’ (presumably landing page) is the ranking URL without the domain portion. You’ll need to combine both the URL and the LP dictionary keys to build the ranking URL which we’ll do in the code later on.

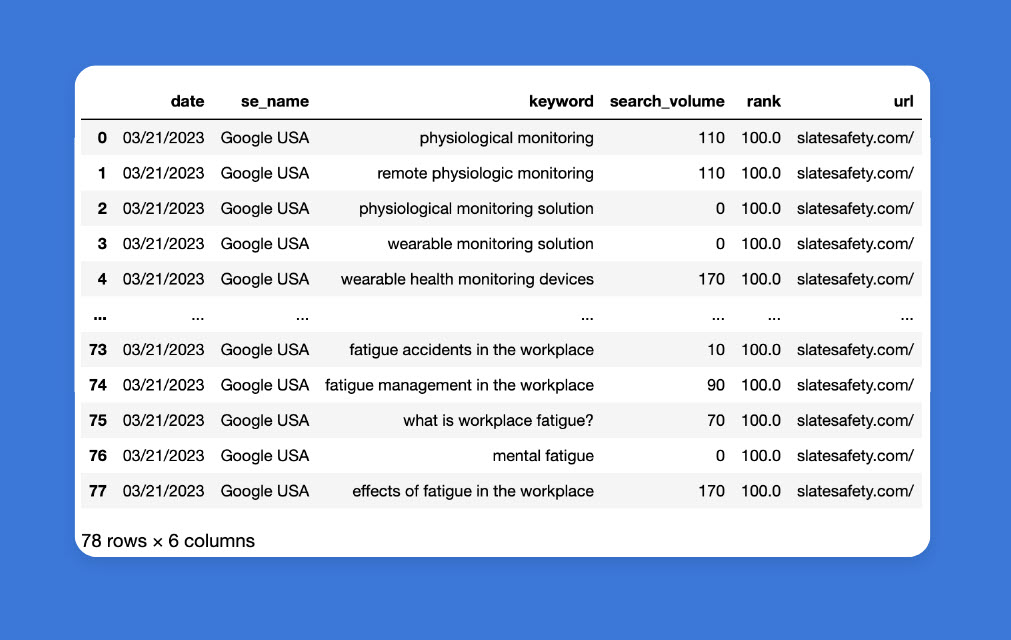

Obviously, the format of the rank data is not very useful to us if we want to export and create a report in Excel or just simply eyeball the data. So we’ll iterate through the dictionary above to create a dataframe. A dataframe is Python’s version of a data table which is akin to what you’d see in a spreadsheet i.e. rows and columns.

First, we’ll create an empty data frame called rankings_df, where all of the data will be stored:

rankings_df = pd.DataFrame(columns=[‘keyword’])

Set an empty list called rows_list which will contain all the rows of data as a list:

rows_list = []

We’ll then work through the rankings_list dictionary (extract printed above) to put the data into a list for an eventual data frame. The URL will be a combination of the URL and landing page. A for loop is a useful coding technique to avoid repeating lines of code, which in this case works by going through each item of rankings_list, indicating to Python which column to store the data, and then adding the result to rows_list:

Once the for loop has finished running (i.e. the code block above), rows_list will be passed into the Pandas DataFrame function to create rankings_df, our rank data table:

rankings_df= pd.DataFrame(rows_list)

The URL slug is wrapped in curly braces which we’ll want to replace:

rankings_df[‘url’] = rankings_df[‘url’].str.replace(‘{‘, ”)

rankings_df[‘url’] = rankings_df[‘url’].str.replace(‘}’, ”)

The rank column also returns ‘-‘ for keywords your site doesn’t rank for. The best response would be to replace this with a ‘100’ and convert the column type to numeric as shown below:

rankings_df[‘rank’] = rankings_df[‘rank’].str.replace(‘-‘, ‘100’)

rankings_df[‘rank’] = rankings_df[‘rank’].astype(float)

To print the data frame:

rankings_df

Now we have a data frame ready to export in CSV format using the Pandas to_csv() function:

rankings_df.to_csv(‘your_filename.csv’)

Taking it further

If you made it this far (without skimming), congratulations! We covered the basics of using Python to use the RankRanger API which can be used to help you:

-

Build custom reporting dashboards -

Extract data at scale for your SEO research

As mentioned, Rank Ranger has insight APIs that can tell you so much about the search intent of a search query which we’ll cover in the next article.

Discover how Rank Ranger can enhance your business

All the data in insights you need to dominate the SERPs